
Best Student Paper Award 2013 – WCE 2013

The conference paper entitled “Video Matching Using DC-image and Local Features”, presented earlier at the “World Congress on Engineering 2013”, won the “Best Student Paper Award 2013”.

Well done and congratulations to Saddam Bekhet.

Conference paper presented at the “World Congress on Engineering 2013”

Saddam Bekhet presented his accepted paper at the “World Congress on Engineering 2013”.

The paper title is “Video Matching Using DC-image and Local Features”.

Abstract:

This paper presents a suggested framework for video matching based on local features extracted from the DC-image of MPEG compressed videos, without decompression. The relevant arguments and supporting evidence are discussed for developing video similarity techniques that work directly on compressed videos, without decompression, and especially utilising small size images. Two experiments are carried out to support the above. The first compares the DC-image and the I-frame in terms of matching performance and the corresponding computation complexity. The second compares local and global features in video matching, especially in the compressed domain and with small size images. The results confirmed that the use of the DC-image, despite its highly reduced size, is promising, as it produces at least similar (if not better) matching precision compared to the full I-frame. Also, using SIFT as a local feature outperforms most of the standard global features in precision. On the other hand, its computation complexity is relatively higher, but it is still within the real-time margin. There are also various optimisations that can be done to improve this computation complexity.
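For readers new to the term: in MPEG (as in JPEG) each 8×8 block is transformed by a 2D DCT, and the block's DC coefficient equals its average intensity scaled by 8. Keeping one DC value per block yields the DC-image, 1/8 of the frame's width and height. The minimal Python sketch below recomputes it from decoded pixel values purely for illustration, assuming a grayscale frame whose dimensions are multiples of 8; the point of the paper is that these values are available in the compressed stream without decompression. The helper names `dct_dc` and `dc_image` are hypothetical.

```python
BLOCK = 8  # MPEG/JPEG transform blocks are 8x8 pixels


def dct_dc(block):
    """DC coefficient F(0,0) of an 8x8 2D DCT-II using the JPEG/MPEG normalisation:
    F(0,0) = 1/4 * C(0) * C(0) * (sum of all pixels), with C(0) = 1/sqrt(2)."""
    c0_squared = 0.5  # C(0)^2 = (1/sqrt(2))^2, written exactly to avoid rounding
    total = sum(sum(row) for row in block)
    return 0.25 * c0_squared * total  # equals 8 * (block mean)


def dc_image(frame):
    """Return the DC-image of a grayscale frame given as a list of rows.

    Each output pixel is DC / 8, i.e. the mean of the corresponding 8x8 block,
    so the result is 1/8 of the frame's height and width.
    """
    height, width = len(frame), len(frame[0])
    if height % BLOCK or width % BLOCK:
        raise ValueError("frame height and width must be multiples of 8")
    image = []
    for top in range(0, height, BLOCK):
        row = []
        for left in range(0, width, BLOCK):
            block = [r[left:left + BLOCK] for r in frame[top:top + BLOCK]]
            row.append(dct_dc(block) / BLOCK)
        image.append(row)
    return image


# Usage: a 16x16 frame made of four flat 8x8 blocks gives a 2x2 DC-image.
frame = [[(x // 8) * 50 + (y // 8) * 10 for x in range(16)] for y in range(16)]
print(dc_image(frame))  # [[0.0, 50.0], [10.0, 60.0]]
```

A real decoder would read the DC terms (already quantised) straight from the I-frame's intra-coded blocks instead of summing pixels, which is what makes the DC-image cheap to obtain.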

Well done and congratulations to Saddam Bekhet.

New Conference paper Accepted to the “World Congress on Engineering”: Video Matching Using DC-image and Local Features


The paper title is “Video Matching Using DC-image and Local Features”.


Well done and congratulations to Saddam Bekhet.

PhD Postgraduate Seminar

Saddam Bekhet, a member of the DCAPI group, gave a short presentation about his PhD work, “Video similarity in compressed domain”, at the School of Computer Science monthly postgraduate seminar on 13/03/2013.

Amjad Altadmri – PhD

Amjad Altadmri has passed his PhD viva, subject to minor amendments, earlier today.

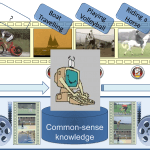

Thesis Title: “Semantic Video Annotation in Domain-Independent Videos Utilising Similarity and Commonsense Knowledgebases”

Thanks to the external examiner, Dr John Wood from the University of Essex, the internal examiner, Dr Bashir Al-Diri, and the viva chair, Dr Kun Guo.

Congratulations and well done.

All colleagues are invited to join Amjad in celebrating his achievement tomorrow (Thursday 28th Feb) at 12:00 noon in our meeting room, MC3108, where some drinks and light refreshments will be available.

Best wishes.

OpenCV workshop

Saddam Bekhet, a member of the DCAPI group, ran a workshop on using OpenCV with Visual Studio 2010 Express Edition on 23/01/2013. The workshop also demonstrated basic OpenCV operations (loading and manipulating images) and advanced operations (a face detector and tracker running on a live camera stream).

Face detection example link

http://opencv.willowgarage.com/wiki/FaceDetection

Summary of the OpenCV installation settings:

1- Download OpenCV files http://sourceforge.net/projects/opencvlibrary/files/opencv-win/2.4.0/

2- Download CMAKE http://cmake.org/cmake/resources/software.html

3- Use CMake to generate the Visual Studio 2010 project files for the OpenCV libraries and DLLs, choosing x64 or whichever architecture suits your setup.

4- Open the OpenCV build folder, find “OpenCV.sln” and build it.

5- Remember to put the OpenCV DLLs next to your project's executable, i.e. in its Debug\EXE directory in Visual Studio.

6- Remember to install Intel Threading Building Blocks (http://threadingbuildingblocks.org/) and put tbb.dll in your Visual Studio Debug\EXE directory as well.
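Steps 5 and 6 boil down to copying the runtime DLLs next to the executable, since Windows searches the application's own directory when loading DLLs. A minimal Python sketch of that copy, assuming you point it at the folders that hold your OpenCV and TBB DLLs; the function name and the example paths are hypothetical and depend on where your build and TBB installation put their files.

```python
import glob
import os
import shutil


def copy_runtime_dlls(dll_dirs, exe_dir):
    """Copy every .dll from the given folders into exe_dir (where your .exe is built).

    Returns the sorted names of the copied files.
    """
    os.makedirs(exe_dir, exist_ok=True)
    copied = []
    for folder in dll_dirs:
        for path in glob.glob(os.path.join(folder, "*.dll")):
            shutil.copy2(path, exe_dir)  # overwrites older copies of the same DLL
            copied.append(os.path.basename(path))
    return sorted(copied)


# Example (hypothetical paths, adjust to your setup):
# copy_runtime_dlls([r"D:\OpenCV\build\bin\Debug", r"D:\tbb\bin\intel64\vc10"],
#                   r"D:\Projects\MyOpenCVApp\x64\Debug")
```

Running it as a post-build step keeps the DLLs in sync after each rebuild of OpenCV.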

Include directories in VS2010 Project–>Properties–>VC++ Directories:

D:\OpenCV\build\include

D:\OpenCV\build\include\opencv

Library directories in VS2010 Project–>Properties–>VC++ Directories (Library Directories):

D:\opencv\build\x64\vc10\lib

Additional dependencies in VS2010 Project–>Properties–>Linker–>Input:

opencv_core242X.lib opencv_imgproc242X.lib opencv_highgui242X.lib opencv_ml242X.lib opencv_video242X.lib opencv_features2d242X.lib opencv_calib3d242X.lib opencv_objdetect242X.lib opencv_contrib242X.lib opencv_legacy242X.lib opencv_flann242X.lib opencv_nonfree242X.lib

Make sure to replace “X” in the previous file names with the correct suffix of your generated OpenCV library files; for example, on my machine the Debug library is “opencv_nonfree242d.lib” (Release libraries have no suffix).
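Because the library names differ only in the module name and the “X” suffix, the Additional Dependencies entry can be generated rather than typed. A small Python sketch, assuming OpenCV 2.4.2 naming as in the list above (version “242”, suffix “d” for Debug builds and none for Release); the helper `opencv_libs` is hypothetical.

```python
# Modules from the linker input list above (OpenCV 2.4.2 naming).
MODULES = ["core", "imgproc", "highgui", "ml", "video", "features2d", "calib3d",
           "objdetect", "contrib", "legacy", "flann", "nonfree"]


def opencv_libs(version="242", debug=True, modules=MODULES):
    """Return .lib names for Linker -> Input -> Additional Dependencies.

    The "X" placeholder becomes "d" for Debug builds and "" for Release builds.
    """
    suffix = "d" if debug else ""
    return [f"opencv_{m}{version}{suffix}.lib" for m in modules]


if __name__ == "__main__":
    # Visual Studio expects the Additional Dependencies entries separated by semicolons.
    print(";".join(opencv_libs(debug=True)))
```

Generating the Debug and Release lists from one module list avoids typos such as a missing version digit in one of the names.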

New Journal paper Accepted to the “Multimedia Tools and Applications”

A new journal paper has been accepted for publication in the journal “Multimedia Tools and Applications”.

The paper title is “A Framework for Automatic Semantic Video Annotation utilising Similarity and Commonsense Knowledgebases”

Abstract:

The rapidly increasing quantity of publicly available videos has driven research into developing automatic tools for indexing, rating, searching and retrieval. Textual semantic representations, such as tagging, labelling and annotation, are often important factors in the process of indexing any video, because of their user-friendly way of representing the semantics appropriate for search and retrieval. Ideally, this annotation should be inspired by the human cognitive way of perceiving and of describing videos. The difference between the low-level visual contents and the corresponding human perception is referred to as the ‘semantic gap’. Tackling this gap is even harder in the case of unconstrained videos, mainly due to the lack of any previous information about the analyzed video on the one hand, and the huge amount of generic knowledge required on the other.

This paper introduces a framework for the Automatic Semantic Annotation of unconstrained videos. The proposed framework utilizes two non-domain-specific layers: low-level visual similarity matching, and an annotation analysis that employs commonsense knowledgebases. A commonsense ontology is created by incorporating multiple-structured semantic relationships. Experiments and black-box tests are carried out on standard video databases for action recognition and video information retrieval. White-box tests examine the performance of the individual intermediate layers of the framework, and the evaluation of the results and the statistical analysis show that integrating visual similarity matching with commonsense semantic relationships provides an effective approach to automated video annotation.
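To make the idea of a visual-similarity layer concrete, here is a hypothetical Python sketch of one common way to turn similarity scores into candidate annotations: similarity-weighted voting over the labels of the most similar already-annotated videos. It is an illustrative assumption, not the paper's algorithm; the function name, the `top_k` parameter and the input formats are invented for the example, and the commonsense knowledgebase layer is not modelled.

```python
from collections import defaultdict


def transfer_annotations(similarities, annotations, top_k=3):
    """Rank candidate labels for a query video (hypothetical illustration).

    similarities: {reference_video_id: visual similarity to the query, higher = closer}
    annotations:  {reference_video_id: list of labels of that annotated reference video}
    Each label of the top_k most similar references receives that reference's score.
    """
    nearest = sorted(similarities.items(), key=lambda kv: kv[1], reverse=True)[:top_k]
    votes = defaultdict(float)
    for video_id, score in nearest:
        for label in annotations.get(video_id, []):
            votes[label] += score
    # Highest total vote first; ties broken alphabetically for a stable order.
    return sorted(votes.items(), key=lambda kv: (-kv[1], kv[0]))


sims = {"v1": 0.9, "v2": 0.6, "v3": 0.2, "v4": 0.1}
labels = {"v1": ["run", "outdoor"], "v2": ["run"], "v3": ["swim"], "v4": ["cook"]}
print(transfer_annotations(sims, labels))  # [('run', 1.5), ('outdoor', 0.9), ('swim', 0.2)]
```

In a two-layer design like the one described, a second stage would then analyse such candidate labels further; that stage is deliberately left out of this sketch.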

Well done and congratulations to Amjad Altadmri.

Two Presentations and Posters in the Vision & Language Network workshop

Three members of the Lincoln School of Computer Science, and the DCAPI group, attended the Vision & Language (V&L) Network workshop on 13-14 Dec. 2012 in Sheffield, UK.

Amr Ahmed, Amjad Al-tadmri and Deema AbdalHafeth attended the event, where Amjad and Deema delivered two oral presentations and two posters about their research work:

1. VisualNet: Semantic Commonsense Knowledgebase for Visual Applications
2. Investigating text analysis of user-generated contents for health related applications

Abstracts are available at http://www.vlnet.org.uk/VLW12/VLW-2012-Accepted-Abstracts.html

Congratulations to all involved.

The event included tutorial sessions (Vision for language people, and language for vision people). We had an increased presence this year.

We also had a good presence at last year’s workshop (http://amrahmed.blogs.lincoln.ac.uk/2011/09/19/vl-network-workshop-brighton/), with good discussions and useful feedback on the presented work.

Looking forward to a similar, if not even better, experience this year.

Best wishes for the presentations.

Amr attended the ECM & workshop of the SUS-IT project

Amr Ahmed attended the ECM (Executive Committee Meeting) of his SUS-IT project (http://sus-it.lboro.ac.uk/) at Loughborough, and participated in the project's two-day workshop. This is one of the most important meetings, especially as the project approaches its final stage, with all work packages represented.